Introduction

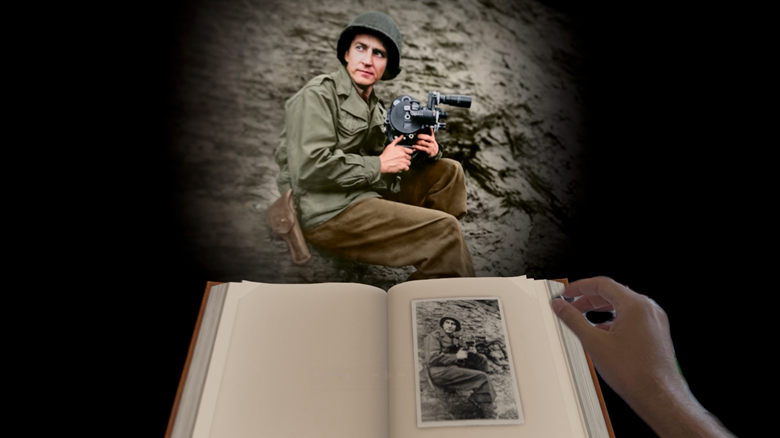

TARGO’s D-DAY: The Camera Soldier redefines what a documentary can be. More than a film, it is a 20-minute immersive experience for Apple Vision Pro that blends history, storytelling, and groundbreaking technology. At its center is Jennifer Taylor, who sets out on a personal journey to uncover her father’s hidden role in the D-Day landings. As her story unfolds, so does a new kind of narrative medium, one that transforms how audiences experience history.

The Challenge

Traditional documentaries share a common limitation: they are bound to the flat screen. However powerful their content, the medium struggles to convey the depth and immediacy of lived experience. TARGO set out to overcome this barrier by creating a radically new format, one that would make history not just something to watch but something to step into.

The Solution

TARGO built D-DAY: The Camera Soldier as a mixed-reality experience that dissolves the boundary between audience and story. The project introduced multiple innovations:

A next-generation media player: Developed from the ground up in Unity, it fuses linear video, interactivity, and real-time 3D environments on a single timeline. This invisible infrastructure allows technology to fade into the background so the story can lead.

Mixed-reality television: Extending beyond the traditional frame, the experience projects light, sound, and archival material into the viewer’s room. Historical photos map onto walls, while a custom 3D camera rig transforms 16:9 footage into striking 3D imagery.

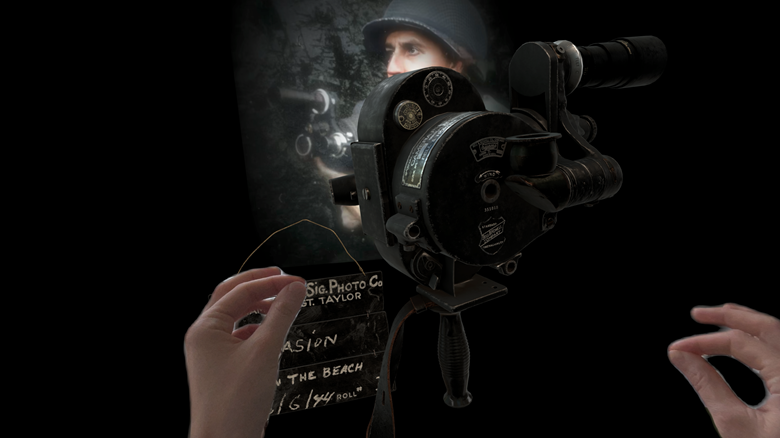

Natural interactions: Through intuitive hand gestures and eye tracking, viewers mirror Jennifer’s actions, such as turning pages and lifting objects. This creates a subtle sense of embodiment without disrupting the narrative flow.

Archival 4D remastering: Photographs from 80 years ago are reconstructed as 4D slow-motion environments. Digital twins of objects allow audiences to interact with history in unprecedented ways.

Responsible AI integration: Generative AI supported pre-production concept art, storyboard design, and archival restoration, always under the guidance of historians. Original archival footage was preserved, and transparency was ensured through published disclosures.

The Results

D-DAY: The Camera Soldier sets a new standard for immersive nonfiction. It demonstrates how deeply personal stories, paired with technological innovation, can resonate in ways traditional formats cannot. By merging storytelling and immersion, it brings audiences closer to history while honoring authenticity and ethical responsibility.

Unity’s Chief Technology Officer, Steve Collins, called it “a radical format innovation” that “sets a new standard for storytelling beyond traditional screens.”

For Jennifer Taylor, the experience was both personal and universal—transforming her father’s wartime legacy into an immersive journey for audiences worldwide.

Conclusion

With D-DAY: The Camera Soldier, TARGO has laid the foundation for a new generation of documentaries. It proves that immersive media can carry the weight of history, expand the boundaries of storytelling, and bring audiences into direct contact with the past. Beyond screens, beyond observation, this is the future of documentary: one where history can be stepped into, remembered, and felt.

COMPANY: Targo